STPCON 2016

flood.io

bit.do/flood_stpcon

Extinction Event

Expectation vs Reality

Expectation

Reality

@tim_koopmans

CTO / Founder Flood IO

Cow Farmer, Child Wrangler & Recovering Load Tester

How to scale from zero to one million requests per second

Be Prepared

- Model

- Measure

- Build

- Decide

A load testing platform

"That doesn't get in the way of load testing"

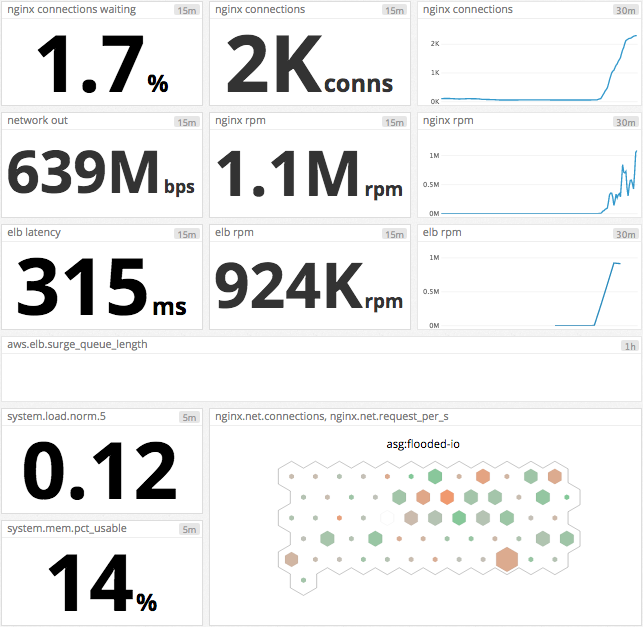

A Platform That Scales

"Distributed, Loosely Coupled, Shared Nothing"

It Scales Because

Scale on Demand

Fraction of the cost

"Pay for the infrastructure you use"

Read why we think paying per VU is broken

en.wikipedia.org/wiki/OODA_loop

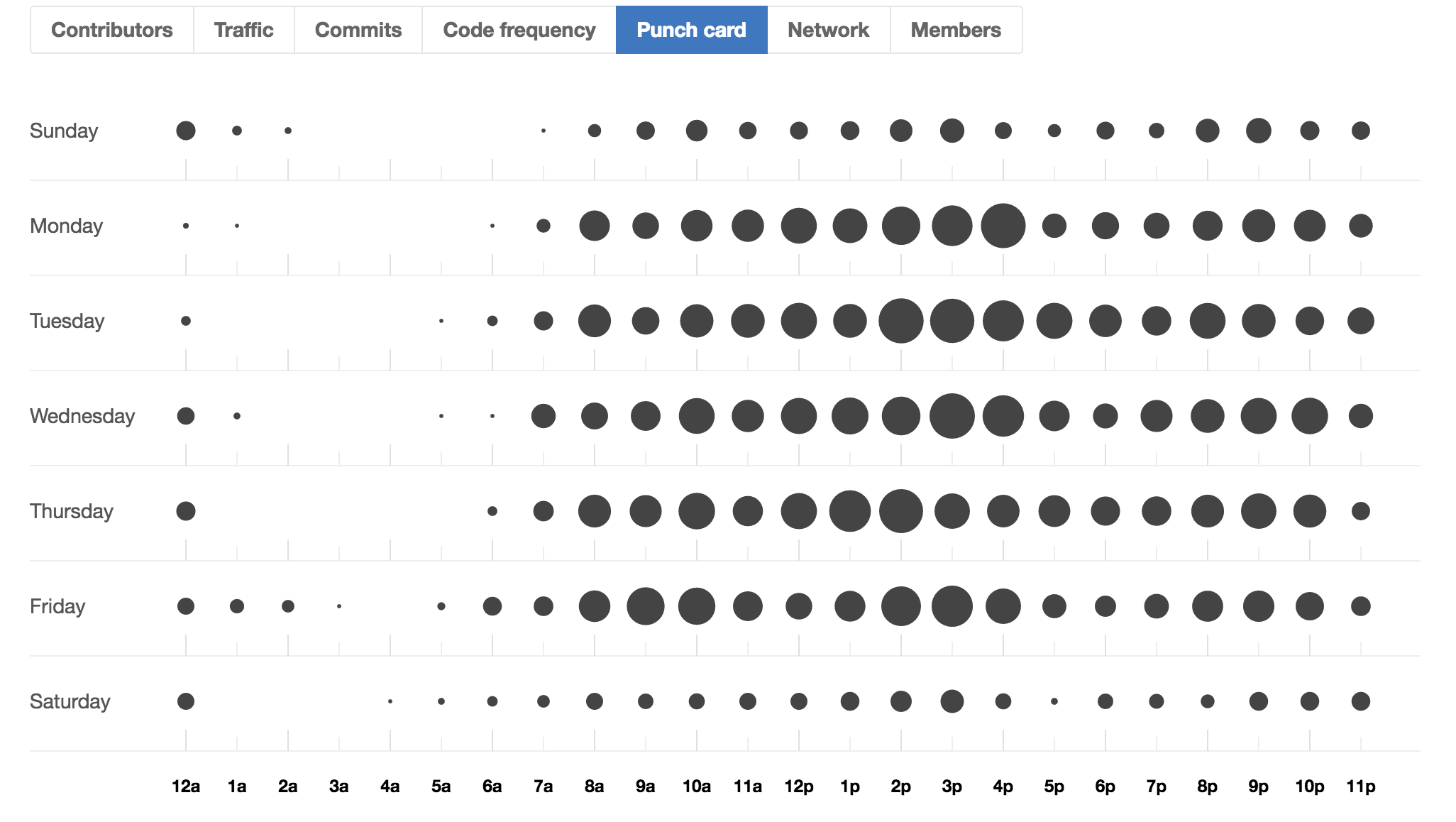

Simulation Model

"A good simulation model is worth a thousand tests"

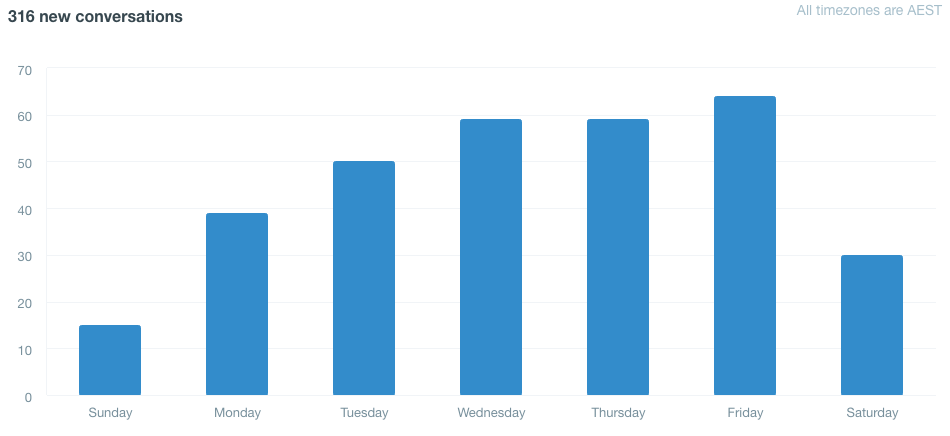

"Last night we had 180K uniques doing something in the order of 500K requests per minute BUT the business wants us to test up to 1M requests per second for the next big sale event"

Response Time

Concurrency

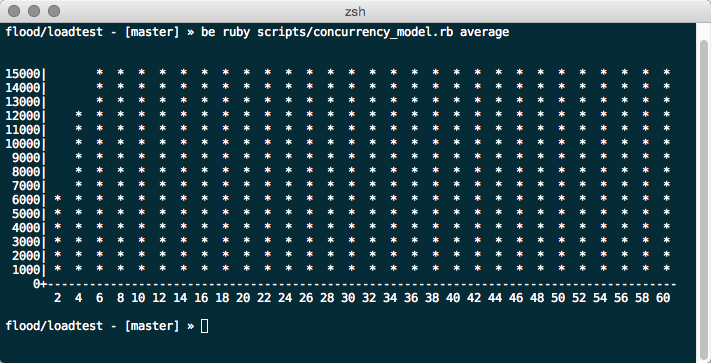

An "average" way to estimate

$$\frac{180{,}000 \text{ uniques}}{60 \text{ minutes} / 5 \text{ minutes}} = 15{,}000 \text{ concurrent users}$$
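
The "average" estimate above can be sketched in a few lines of Python. This is only an illustration: it assumes the 180,000 uniques are spread evenly over a 60 minute window and that every session lasts 5 minutes, and the function and parameter names are hypothetical.

```python
def average_concurrency(uniques: int, window_minutes: float, session_minutes: float) -> float:
    """Average concurrent users, assuming arrivals are spread evenly over the window."""
    # How many back-to-back sessions fit into the observation window.
    sessions_per_window = window_minutes / session_minutes
    return uniques / sessions_per_window


print(average_concurrency(180_000, 60, 5))  # 15000.0
```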

Estimating concurrency

An "average" way to estimate

Estimating concurrency

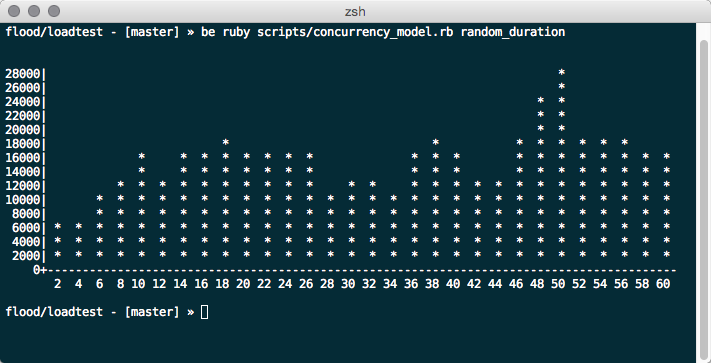

Random session duration

Estimating concurrency

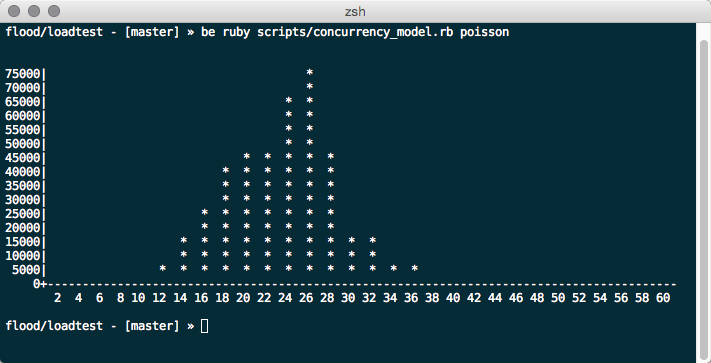

Poisson Distributed
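
One way to move past the "average" estimate is a small simulation. The sketch below assumes Poisson arrivals at 3,000 users per minute (180,000 uniques over 60 minutes) and, as a further assumption not stated on the slides, exponentially distributed session durations with a 5 minute mean. The function name, parameters and seed are hypothetical.

```python
import random


def simulate_concurrency(arrivals_per_minute, mean_session_minutes, duration_minutes, seed=42):
    """Return (peak, time-averaged) concurrent users over the simulated window."""
    rng = random.Random(seed)
    events = []  # (time in minutes, +1 for session start / -1 for session end)
    t = 0.0
    while True:
        # A Poisson arrival process has exponentially distributed gaps.
        t += rng.expovariate(arrivals_per_minute)
        if t >= duration_minutes:
            break
        # Assumed session length distribution: exponential with the given mean.
        end = t + rng.expovariate(1.0 / mean_session_minutes)
        events.append((t, 1))
        events.append((min(end, duration_minutes), -1))
    events.sort()

    active = peak = 0
    user_minutes = 0.0
    previous = 0.0
    for when, delta in events:
        user_minutes += active * (when - previous)  # accumulate area under the curve
        previous = when
        active += delta
        peak = max(peak, active)
    return peak, user_minutes / duration_minutes


peak, average = simulate_concurrency(3_000, 5, 60)
print(f"peak={peak} average={average:.0f}")
```

Because the simulated window starts empty, the time-averaged figure includes the ramp-up, while the peak typically lands somewhat above the 15,000 "average" estimate. That gap is the reason to model a distribution rather than a single number.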

Estimating throughput

Another "average" method

$$\frac{500{,}000 \text{ requests per minute}}{15{,}000 \text{ users}} \approx 33 \text{ rpm per user}$$
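
As a rough sketch of the same arithmetic, the per-user rate can also be turned into a pacing interval between requests, which is how it would usually be fed into a test plan. The function names are hypothetical and the inputs are the figures quoted above.

```python
def rpm_per_user(total_rpm: float, concurrent_users: float) -> float:
    """Average requests per minute issued by each concurrent user."""
    return total_rpm / concurrent_users


def seconds_between_requests(rpm: float) -> float:
    """Pacing interval a single simulated user needs to hit the given rpm."""
    return 60.0 / rpm


rate = rpm_per_user(500_000, 15_000)
print(round(rate))                               # 33
print(round(seconds_between_requests(rate), 1))  # 1.8
```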

Estimating throughput

We can start to validate business targets of 1M rps 😲

$$\frac{60{,}000{,}000 \text{ requests per minute}}{15{,}000 \text{ users}} = 4{,}000 \text{ rpm per user}$$

OR maybe ...

$$\frac{60{,}000{,}000 \text{ requests per minute}}{33 \text{ rpm per user}} \approx 1.8\text{M concurrent users}$$
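
The two readings of the 1M rps target can be compared side by side. The sketch below is illustrative only: it holds one variable at its observed value (15,000 users or 33 rpm per user) and solves for the other, with hypothetical constant names.

```python
TARGET_RPS = 1_000_000
OBSERVED_USERS = 15_000
OBSERVED_RPM_PER_USER = 33

# Convert the business target from requests per second to requests per minute.
target_rpm = TARGET_RPS * 60

# Option 1: same number of users, each working much harder.
rpm_per_user_needed = target_rpm / OBSERVED_USERS

# Option 2: same per-user behaviour, many more users.
users_needed = target_rpm / OBSERVED_RPM_PER_USER

print(f"{target_rpm:,} rpm")                          # 60,000,000 rpm
print(f"{rpm_per_user_needed:,.0f} rpm per user")     # 4,000 rpm per user
print(f"{users_needed / 1e6:.1f}M concurrent users")  # 1.8M concurrent users
```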

Uniform Distributions

"They don't exist"

Exploratory Targets

- concurrency 15K, 30K, 75K, 2M

- throughput 1..50 rpm per user

- response time 200..2000 ms
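
To see which exploratory combinations actually approach the 1M rps business target, the grid can be enumerated. This is a sketch under stated assumptions: it samples only three per-user rates (1, 33 and 50 rpm) from the 1..50 range, and the names are hypothetical.

```python
TARGET_RPS = 1_000_000
CONCURRENCY_LEVELS = [15_000, 30_000, 75_000, 2_000_000]
RPM_PER_USER_SAMPLES = [1, 33, 50]  # sampled from the 1..50 rpm range


def total_rps(users: int, rpm_per_user: float) -> float:
    """Aggregate requests per second for a given population and per-user rate."""
    return users * rpm_per_user / 60


# Collect every combination that meets or exceeds the target.
reaching_target = [
    (users, rpm)
    for users in CONCURRENCY_LEVELS
    for rpm in RPM_PER_USER_SAMPLES
    if total_rps(users, rpm) >= TARGET_RPS
]

for users in CONCURRENCY_LEVELS:
    for rpm in RPM_PER_USER_SAMPLES:
        print(f"{users:>9,} users x {rpm:>2} rpm = {total_rps(users, rpm):>12,.0f} rps")

print(reaching_target)  # [(2000000, 33), (2000000, 50)]
```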

Measure

- Response Time

- Concurrency

- Throughput

- Errors

- Infrastructure / Application

Our Mission

Make it simple

"Application Performance Management is a vast ecosystem"

Production API

Build & Code

https://github.com/flood-io/loadtest

    ├── Dockerfile
    ├── Makefile
    ├── config
    │   ├── default.vcl
    │   ├── limits.conf
    │   ├── nginx.conf
    │   ├── supervisord.conf
    │   └── sysctl.conf
    ├── scripts
    │   └── jenkins.sh
    ├── terraform
    │   ├── api
    │   │   └── main.tf
    │   ├── asg
    │   │   ├── cloudconfig.yml
    │   │   └── main.tf
    │   └── elb
    │       └── main.tf
    └── tests
        └── load.rb

🙀

Version Tests

"Treat your tests as any other code"

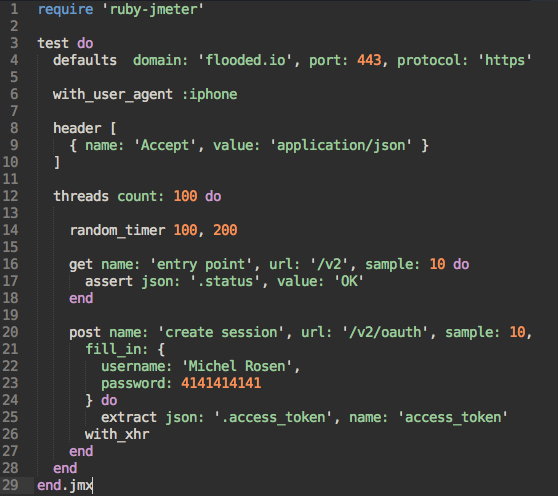

Ruby-JMeter

"Record and replay is b@##$h!t"

github.com/flood-io/ruby-jmeter

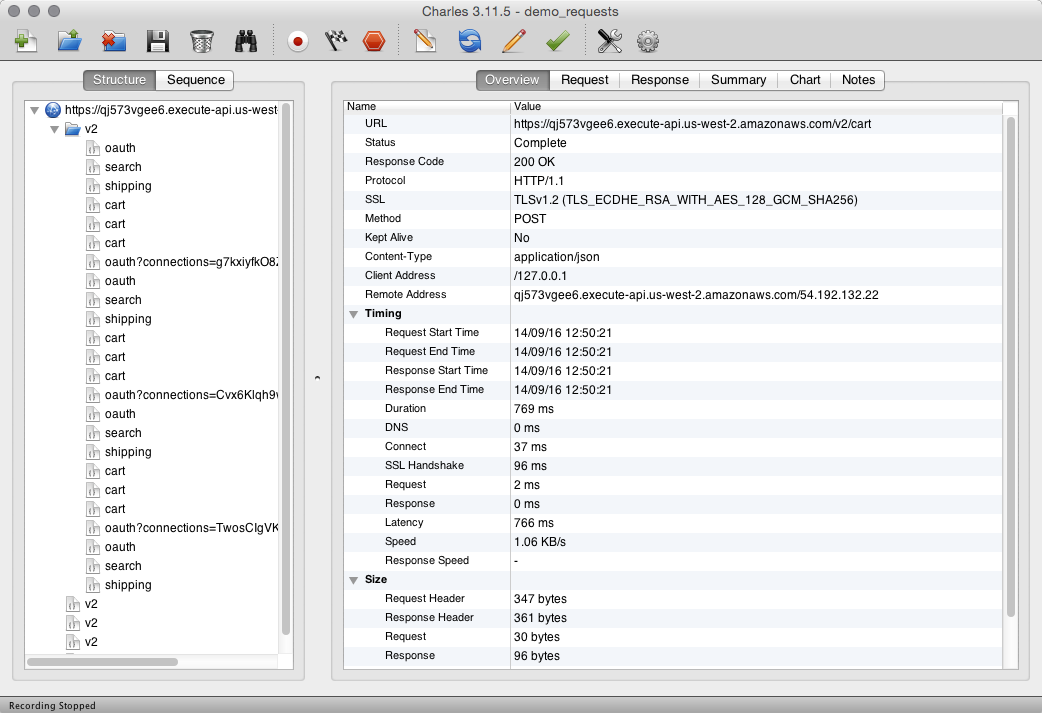

Charles Proxy

Go Deep

"Canary in the mine"

"Halve and halve again"

"Small targeted changes"

"(re)Moving bottlenecks"

"Scalability Curves"

Ready Set Test

Model, Measure, Build ... Decide

Reality?

Get inside your OODA loop

Do more load testing

At a fraction of the cost

"Today's load test of 30K users, 3M rpm and +2Gbps has cost us $15 per hour"
